TechTarget News

News from TechTarget's global network of independent journalists. Stay current with the latest news stories. Browse thousands of articles covering hundreds of focused tech and business topics available on TechTarget's platform.

Latest News

-

14 May 2025

Australian public cloud spending to hit A$26.6bn in 2025

By Aaron Tan

Gartner forecasts strong growth in cloud infrastructure and platform services even as Australian organisations grapple with scaling AI initiatives and managing rising cloud expenditure

-

14 May 2025

New security paradigm needed for IT/OT convergence

By Aaron Tan

Industry leaders and policymakers highlight growing cyber threats from the integration of IT and operational technology systems, calling for collaboration and regulatory frameworks to protect critical systems, among other measures

-

13 May 2025

Microsoft tackles 5 Windows zero-days on May Patch Tuesday

By Tom Walat

The company addresses 72 unique CVEs this month, but several AI features bundled in a larger-than-usual update could bog down some networks.

-

13 May 2025

AI copyright suits to be affected by Copyright Office stance

By Makenzie Holland

Federal report findings could weaken GenAI vendors' fair use positions in copyright suits.

-

13 May 2025

Surrey Search & Rescue taps Ericsson and UK Connect for critical connectivity

By Joe O’Halloran

Advanced connectivity solution, including a vehicle router and ruggedised devices deployed under the SARNET initiative, helps search and rescue teams respond faster, improving survival outcomes

-

13 May 2025

M&S forces customer password resets after data breach

By Alex Scroxton

M&S is instructing all its customers to change their account passwords after a significant amount of data was stolen in a DragonForce ransomware attack.

-

13 May 2025

KDDI, DriveNets team to accelerate open network architecture

By Joe O’Halloran

Strategic partnership to see leading carrier and software-based network services provider promote openness, disaggregation and innovation from the core network to the edge and aggregation layers

-

13 May 2025

Athenahealth says open ecosystem is core to EHR integration

By Brian T. Horowitz

In May, Suki became the first ambient AI partner generally available across Athenahealth’s network, but the EHR provider said the key to interoperability is offering several options.

-

13 May 2025

UnitedHealth CEO Andrew Witty steps down

By Jacqueline LaPointe

UnitedHealth Group announced the appointment of Stephen J. Hemsley as the company's CEO after Andrew Witty stepped down for personal reasons.

-

13 May 2025

Sophos rolls out MSP Elevate

By Simon Quicke

Programme for managed service providers aims to support those committed to delivering growth

-

13 May 2025

AWS Marketplace channel partners rev software, service sales

Partners are building a new route to market on AWS Marketplace. One has surpassed $1 billion in total sales and another expects to soon generate half its revenue there.

-

13 May 2025

USAA takes an 'experiment and see' approach with AI

By Esther Shittu

The financial services company experiments with different AI models, tools and technologies, and uses AI both internally and externally.

-

13 May 2025

HP expands refurbished PC scheme to cover the UK

By Simon Quicke

HP provides a greener option for partners to pitch to customers concerned about sustainability, as well as budgets

-

13 May 2025

O2 upgrades Wembley Stadium connectivity

By Joe O’Halloran

Upgrade at major UK sporting arena gives fans access to a dedicated distributed antenna system-based 5G standalone network inside the stadium, in addition to small cells and upgraded masts in the surrounding area

-

13 May 2025

Private cellular business deployments to reach more than 7,000 by 2030

By Joe O’Halloran

Research finds enhanced security features and neutral host deployments will be central to accelerating growth across all sectors in this key segment of the mobile industry

-

13 May 2025

Controversial Post Office Horizon system could stay until 2033

By Karl Flinders

Post Office seeks off-the-shelf Horizon replacement as part of £492m tender that includes ongoing support for Horizon up to 2033

-

13 May 2025

Bytes breaks through GII £2bn barrier

By Simon Quicke

Channel player Bytes shares full-year numbers that underline its ability to emerge strongly from a challenging market

-

13 May 2025

Gov.uk One Login loses certification for digital identity trust framework

By Bryan Glick

The government’s flagship digital identity system has lost its certification against the government’s own digital identity trust framework

-

13 May 2025

NHS trust cloud plans hampered by Trump tariff uncertainty

Essex NHS wants to move some capacity to the Nutanix cloud, but can’t be certain prices will hold between product selection and when procurement plans gain approval

-

13 May 2025

Evidence reveals Post Office scandal victims short-changed in compensation payouts

By Karl Flinders

Subpostmasters who appealed compensation payments have significantly increased ‘worryingly undervalued’ settlements

-

13 May 2025

Australian data breaches hit record high in 2024

By Aaron Tan

More than 1,100 data breaches were reported in Australia last year, a 25% jump from 2023, prompting calls for stronger security measures across businesses and government agencies

-

12 May 2025

Nokia looks to light up in-building enterprise connectivity with Aurelis

By Joe O’Halloran

Fibre-based LAN solution designed to deliver simple, reliable and future-proof local area connectivity for enterprises, using up to 70% less cabling and 40% less power than copper-based technologies

-

12 May 2025

Wi-Fi 7 trials show ‘significant’ performance gains in enterprise environments

By Joe O’Halloran

Wireless technology consortium reveals results of industry trials of the latest Wi-Fi standard in enterprise scenarios, showing increased throughput, lower latency and enhanced efficiency for high-demand applications

-

12 May 2025

Channel moves: Who’s gone where?

By Simon Quicke

Moves of note this week at WatchGuard, Quantum Trilogy, Delinea and Nexer Enterprise Applications

-

12 May 2025

Contempt order worsens Apple's antitrust woes

By Makenzie Holland

A federal judge found Apple to be in contempt of an injunction ordering the company to make alternative payment options easier to access in its App Store.

-

12 May 2025

2025 MedTech Breakthrough winners for healthcare payments

By Jacqueline LaPointe

Waystar, Candid Health and TrustCommerce are among the winners of the 2025 MedTech Breakthrough Awards in the healthcare payments category.

-

12 May 2025

Systemic gaps hinder cell and gene therapy adoption, access

By Alivia Kaylor, MSc

Over 200 cell and gene therapy treatments could be FDA-approved by 2030, but adoption could lag due to structural barriers, limited infrastructure and centralized access.

-

12 May 2025

Italian bank signs 10-year deal with Google Cloud

By Karl Flinders

UniCredit will transform operations through cloud, AI and data analytics technologies from Google Cloud

-

12 May 2025

Limited payer prescription drug coverage pinches patients

By Sara Heath

As payers limit prescription drug coverage, they are increasingly setting up restrictions like prior authorizations and step therapy.

-

12 May 2025

Virgin Media O2, Daisy Group merge to form B2B comms company

By Joe O’Halloran

Comms provider and IT services company unite to offer digital-first connectivity and managed services

-

12 May 2025

University will ‘pull the plug’ to test Nutanix disaster recovery

By Antony Adshead

University of Reading set to save circa £500,000 and deploy Nutanix NC2 hybrid cloud that will allow failover from main datacentre

-

09 May 2025

News brief: AI security risks highlighted at RSAC 2025

By Sharon Shea

Check out the latest security news from the Informa TechTarget team.

-

09 May 2025

ServiceNow shops share AI copilot results, prep for agents

By Beth Pariseau

IT ops teams have often already laid the groundwork for generative AI automation with platform consolidation and the previous wave of AIOps tools.

-

09 May 2025

UK broadband hits 2025 target with strong first quarter

By Joe O’Halloran

Study from UK communications regulator finds gigabit broadband on track to become virtually universally available across the country by 2030, with the number of full-fibre broadband connections in particular increasing to nine million in the past six months

-

09 May 2025

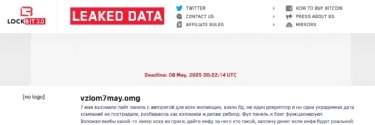

Ransomware: What the LockBit 3.0 data leak reveals

By Valéry Rieß-Marchive

An administration interface instance for the ransomware franchise's affiliates was attacked on 29 April. Data from its SQL database has been extracted and disclosed

-

09 May 2025

Nutanix platform may benefit from VMware customer unrest

By Tim McCarthy

Nutanix's Next 2025 conference attendees are jumping ship to the platform after Broadcom's VMware buy, as Nutanix executives plan enterprise-driven evolutions for the platform.

-

09 May 2025

Datadog acquisition gives it feature flagging for AI

By Esther Shittu

The move enables the vendor to integrate the startup's feature flagging software into its products. It is the second acquisition the vendor has made in the past few weeks.

-

09 May 2025

Interview: Amanda Stent, head of AI strategy and research, Bloomberg

By Karl Flinders

Hallucinating AI, which lies through its teeth, keeps Amanda Stent busy at data analytics and intelligence giant Bloomberg

-

09 May 2025

Broadcom letters demonstrate push to VMware subscriptions

By Cliff Saran

The owner of VMware is reminding customers on perpetual licences that they will no longer be able to buy support for their VMware products

-

09 May 2025

Channel catch-up: News in brief

By Simon Quicke

Developments this week at Assured Data Protection, Acer, Canon, UiPath and Coro

-

09 May 2025

Government calls on tech companies to join crime-cutting campaign

By Lis Evenstad

The justice secretary met with 30 tech companies to discuss how technology can help to tackle prison violence and reduce reoffending rates

-

09 May 2025

Apple to play modest role after datacentre heat breakthrough in Denmark

By Mark Ballard

Country at forefront of industrial heat recycling expects datacentres to play only a modest role in heating homes after government paved way with widely celebrated law

-

09 May 2025

AvePoint highlights partner contribution to Q1 performance

By Simon Quicke

Security player AvePoint is on a mission to grow revenue significantly over the next five years, with the channel a vital part of that ambition

-

09 May 2025

Government launches £8.2m plan to encourage girls into AI

By Clare McDonald

The government is investing in teacher training and student support to get more girls into maths classes, ultimately leading to AI careers

-

09 May 2025

AI training gap puts Europe at a disadvantage

By Cliff Saran

Forrester study finds that workers in the US are more likely than their European counterparts to have received AI training to support their job roles

-

09 May 2025

Nordic countries plan offline payment system for disaster backup

By Karl Flinders

Board member of the Bank of Finland reveals plan as likelihood of losing internet connectivity increases amid geopolitical tensions

-

08 May 2025

Government will miss cyber resiliency targets, MPs warn

By Alex Scroxton

A Public Accounts Committee report on government cyber resilience finds that the Cabinet Office has been working hard to improve, but is likely to miss targets and needs a fundamentally different approach

-

08 May 2025

Nutanix CEO talks customer challenges and platform updates

By Tim McCarthy

Many customers are still looking for a VMware exit and need a modernized platform, Nutanix President and CEO Rajiv Ramaswami says in this Q&A with Informa TechTarget.

-

08 May 2025

Preparing for post-quantum computing will be more difficult than the millennium bug

By Bill Goodwin

The job of getting the UK ready for post-quantum computing will be at least as difficult as the Y2K problem, says National Cyber Security Centre CTO Ollie Whitehouse

-

08 May 2025

Trump targets AI diffusion rule as big tech talks AI race

By Makenzie Holland

President Donald Trump will walk back a Biden-era rule restricting the sale of advanced U.S. AI chips and models in the month it's set to take effect.

-

08 May 2025

US tells CNI orgs to stop connecting OT kit to the web

By Alex Scroxton

US authorities have released guidance for owners of critical national infrastructure in the face of an undisclosed number of cyber incidents

-

08 May 2025

UK Digital Services Tax survives US trade negotiations

By Alex Scroxton

The UK will retain its Digital Services Tax on tech giants in a landmark US trade agreement that paves the way for a future digital tech deal

-

08 May 2025

Virtual pulmonary rehab effective for high-need COPD patients

By Anuja Vaidya

New research shows that virtual pulmonary rehab is as effective for COPD patients who need oxygen as for those who do not, offering an individualized approach to rehab.

-

08 May 2025

Nutanix opens up to all external storage

By Antony Adshead

CEO Rajiv Ramaswami says Nutanix will open its platform to all external storage, allowing it to profit from customers wanting to move away from VMware, as well as the hyper-converged curious

-

08 May 2025

SAP sales tactic fuels IT disconnect

By Cliff Saran

Business heads are being targeted by SAP as it pushes out its Rise cloud ERP system

-

08 May 2025

Climb increases AI support

By Simon Quicke

Distributor looks to guide partners from getting started through to selling, helping them get more comfortable pitching artificial intelligence offerings

-

08 May 2025

NHS launches digital tool to improve cancer care

By Lis Evenstad

Cancer 360 tool aims to bring together data on cancer patients in one place, allowing clinicians access to all the information through the Federated Data Platform

-

08 May 2025

Gartner shines light on B2B buyer challenges

By Simon Quicke

Analyst firm Gartner reveals conflicts across numerous stakeholders and an undermining of digital transformation due to lack of staff knowledge

-

08 May 2025

AI adoption: AWS addresses the skills barrier holding back enterprises

By Caroline Donnelly

The AWS Summit in London saw the public cloud giant appoint itself to take on the task of skilling up hundreds of thousands of UK people in using AI technologies

-

08 May 2025

Leading European telcos call for exclusive access to 6GHz band

By Joe O’Halloran

Open letter from Europe’s leading telcos demands exclusive access to key wireless spectrum band for the benefit of Europe’s economy and to ensure successful roll-out of 6G, underpinning digital societies

-

08 May 2025

Girls more concerned about AI bias than boys

By Clare McDonald

When asked their opinions on the growing use of AI, girls expressed concerns about possible biases it will perpetuate, while boys were worried about cyber security

-

08 May 2025

Oracle NetSuite’s Goldberg: Autonomous AI next applications phase

By Brian McKenna

Speaking with Computer Weekly at SuiteConnect 2025 in London, Evan Goldberg, executive vice-president of Oracle NetSuite, discussed how artificial intelligence in ERP is evolving

-

08 May 2025

Data streaming matures, cultural shift is key

By Stephen Withers

Confluent’s Tim Berglund warns that a cultural transformation towards creating valued data products is vital even as the technology for data streaming is maturing

-

08 May 2025

UK government websites to replace passwords with secure passkeys

By Bill Goodwin

Government websites are to replace difficult-to-remember passwords with highly secure passkeys that will protect against phishing and cyber attackers

-

07 May 2025

ServiceNow digs deeper into data for AI with Data.world buy

By Beth Pariseau

ServiceNow will fold in metadata collection and data catalog tools from the data governance specialist to fuel its agentic AI ambitions.

-

07 May 2025

AI model boosts delirium detection rate in hospitals

By Anuja Vaidya

Research shows that using a new AI model resulted in a four-fold increase in the rate of delirium detection among hospitalized patients, enhancing delirium treatment.

-

07 May 2025

Meta awarded $167m in court battle with spyware mercenaries

By Alex Scroxton

WhatsApp owner Meta is awarded millions of dollars in damages and compensation after its service was exploited by users of mercenary spyware developer NSO’s infamous Pegasus mobile malware

-

07 May 2025

Europe leads shift from cyber security ‘headcount gap’ to skills-based hiring

By Kim Loohuis

Research from Sans Institute reveals European organisations are leading a global shift in hiring priorities, driven by regional regulatory frameworks

-

07 May 2025

Chaos spreads at Co-op and M&S following DragonForce attacks

By Alex Scroxton

No end is yet in sight for UK retailers subjected to apparent ransomware attacks

-

07 May 2025

DOJ sues Aetna, other major MA insurers over alleged kickbacks

By Jacqueline LaPointe

The Department of Justice accuses MA insurers Aetna, Elevance Health and Humana of paying kickbacks to brokers for enrollments and of discriminating against disabled beneficiaries.

-

07 May 2025

Oxford Uni adds cyber resilience module to MBA programme

By Alex Scroxton

Oxford University’s Saïd Business School is working with cyber response specialist Sygnia to help future business leaders get on top of security

-

07 May 2025

NIST Privacy Framework receives draft update

By Jill McKeon

A draft update to the NIST Privacy Framework aims to streamline the document and align it with the NIST Cybersecurity Framework.

-

07 May 2025

UK at risk of Russian cyber and physical attacks as Ukraine seeks peace deal

By Bill Goodwin

UK cyber security chief warns of ‘direct connection’ between Russian cyber attacks and physical threats to the UK

-

07 May 2025

SAS update targets responsible agentic AI development

By Eric Avidon

The longtime independent analytics vendor's latest Viya platform update includes Intelligent Decisioning, a set of governance-infused capabilities for developing agents.

-

07 May 2025

Nutanix expands storage, Kubernetes and AI platforms at Next

By Tim McCarthy

The latest capabilities and partnerships highlighted at Nutanix's Next conference extend disaggregated storage to Pure Storage, add more Kubernetes portability and bring new AI partnerships.

-

07 May 2025

Nutanix breaks the bounds of HCI again with Pure Storage linkup

By Antony Adshead

Hyper-converged infrastructure pioneer adds external Pure Storage arrays in a move that it touts as a way for customers to get off VMware, but which also helps them scale for AI

-

07 May 2025

Nutanix escapes the datacentre with Cloud Native AOS

By Antony Adshead

Hyper-converged infrastructure provider offers its operating system independently of a hypervisor to allow containerised apps to run at the edge or on Kubernetes runtimes in the Amazon cloud

-

07 May 2025

Global broadband growth slows at end of 2024

By Joe O’Halloran

Fourth quarter 2024 update of global connectivity market shows subscribers pass 1.5 billion barrier as fibre, non-terrestrial and fixed wireless gain popularity, especially in emerging markets

-

07 May 2025

Redcentric shares trading update and welcomes fresh CEO

By Simon Quicke

Managed service player reveals how its latest fiscal year has shaped up as it welcomes an experienced industry player to run the business

-

07 May 2025

Nokia supplies 1.2Tbps backbone in Pakistan and private wireless to Maersk fleet

By Joe O’Halloran

Comms tech provider lands large-scale contracts for an optical fixed network in Pakistan and an IoT connectivity platform with mobile network to enhance operational efficiency at a shipping firm

-

07 May 2025

Schools pilot exam results app that could save £30m

By Karl Flinders

Exam results and certificates will be provided digitally in a pilot the government hopes will lead to huge financial savings

-

07 May 2025

Evolve IP combines tech and sales support function

By Simon Quicke

Comms player makes move to streamline assistance it can provide to its reseller base

-

07 May 2025

UK hands Indian IT suppliers competitive boost in trade deal

By Karl Flinders

Trade deal will exempt IT workers from India from paying National Insurance contributions for three years

-

07 May 2025

Cisco lays out plans for networking in era of quantum computing

By Cliff Saran

The network equipment provider has opened a new lab and developed a prototype chip as it fleshes out its quantum networking strategy

-

07 May 2025

Datacentre outages decreasing in frequency, Uptime Institute Intelligence data shows

By Caroline Donnelly

Datacentre outages are becoming less common and less severe, but power supply issues remain the enduring cause of most downtime incidents

-

07 May 2025

DSIT aims to bolster expertise with year-long secondments

By Cliff Saran

To drive forward its Plan for Change, the Labour government is looking to hire 25 experts for the Department for Science, Innovation and Technology Fellowship programme

-

07 May 2025

Bill Gates hails ‘stunning’ AI progress, urges focus on global equality

By Aaron Tan

The Microsoft co-founder underscored AI’s transformative potential in healthcare, education and agriculture while highlighting the need to ensure its benefits reach lower-income nations

-

07 May 2025

DCC signs contracts for major smart meter network upgrade

By Joe O’Halloran

UK energy and utilities data company inks major contract with industry partners in IT and communications sector to enable modernisation of Data Service Provider platform

-

07 May 2025

UK critical systems at risk from ‘digital divide’ created by AI threats

By Bill Goodwin

GCHQ’s National Cyber Security Centre warns that a growing ‘digital divide’ between organisations that can keep pace with AI-enabled threats and those that cannot is set to heighten the UK's overall cyber risk

-

07 May 2025

ServiceNow K25: McDermott vaunts agentic AI platform as revolutionary

By Brian McKenna

At its Knowledge 25 customer and partner conference in Las Vegas, ServiceNow painted an orchestrated agentic AI future for its Now platform and targeted CRM as a prime field for growth

-

07 May 2025

Latest Neo4j release aims to simplify graph technology

By Eric Avidon

Prebuilt algorithms that reduce the complexity of queries and connectivity to any data source are designed to make graph-based analysis accessible to a broader audience.

-

06 May 2025

IBM customers assess the performance of AI agents

By Esther Shittu

Enterprises are carefully watching the popular technology. While some see agents as too immature relative to what vendors promise, others are slowly integrating them.

-

06 May 2025

Epicor ERP adds agentic AI capabilities for supply chain

By Jim O'Donnell

Epicor Prism's new agentic AI use cases aim to make supply chain processes more efficient and productive for its manufacturing customers.

-

06 May 2025

Government industrial strategy will back cyber tech in drive for economic growth

By Bill Goodwin

With over 2,000 cyber security businesses across the UK, the government plans to target cyber as a priority to grow the economy

-

06 May 2025

Staying nonprofit could slow, not stop, OpenAI fundraising

By Shaun Sutner

The GenAI vendor could find itself slowing down after its decision to retain a nonprofit ownership structure, at least for now.

-

06 May 2025

Medicaid work requirements slated to cost the U.S. jobs

By Sara Heath

National Medicaid work requirements could cost the U.S. up to 449,000 jobs and billions in GDP.

-

06 May 2025

ServiceNow expands AI governance, emphasizes ROI

By Beth Pariseau

Enterprises remain nervous about deploying AI agents in production, as IT vendors vie to demonstrate the value and comprehensiveness of their platforms.

-

06 May 2025

Options for Google as DoJ seeks divestiture

By Cliff Saran

The US Department of Justice is calling for Google to divest its adtech business. Google says it’s impossible. But is there an alternative?

-

06 May 2025

ServiceNow rolls into Salesforce territory with CRM agentic AI

By Don Fluckinger

ServiceNow makes its pitch to be a CRM, adding CPQ and order management features.

-

06 May 2025

Health equity incentive in P4P model needs adjusting

By Jacqueline LaPointe

A research letter examining the use of a health equity incentive in the End-Stage Renal Disease Treatment Choices Model points to further adjustments in pay-for-performance models.

-

06 May 2025

FDA reviews oral GLP-1 semaglutide for obesity

By Alivia Kaylor, MSc

Novo Nordisk is pursuing FDA approval for the first oral GLP-1 semaglutide, aiming to expand Wegovy's indication for chronic obesity and weight management.

-

06 May 2025

Belfast Digital Twin Centre to fast track industrial adoption of deep tech

By Joe O’Halloran

Tech centre designed to create conditions for digital twins to be better understood, more easily developed and more meaningfully applied, giving businesses real-time capabilities to make smarter decisions

-

06 May 2025

Fintech summit reveals what’s next for sector

By Karl Flinders

The UK’s fintech sector attracted $3.6bn investment last year, which was only bettered by the US, but how will UK fintech continue to grow?