What is continuous data protection (CDP)?

Continuous data protection (CDP), also known as continuous backup, is a backup and recovery storage system in which every change made to an enterprise's data is backed up automatically as it occurs. In effect, CDP creates an electronic journal of complete storage snapshots, one for every instant in time that data modification occurs.

CDP preserves a record of every transaction that takes place in the enterprise. This is helpful if the system becomes infected with a virus or other malware, such as ransomware, or if a file becomes damaged or corrupted and the problem isn't discovered right away. CDP makes it possible to recover the most recent clean copy of the affected file by stepping back through the record of transactions to restore the file to a previous state or point in time.
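Here is a minimal Python sketch of a write journal that makes "stepping back through the record of transactions" concrete. It is a toy model built on a few assumptions. It stores whole-file versions rather than the block-level writes that real CDP products capture. It uses integer timestamps. Its names (`WriteJournal`, `record`, `restore_as_of`) are hypothetical and don't correspond to any product's API.

```python
from __future__ import annotations

from bisect import bisect_right


class WriteJournal:
    """Toy CDP journal: keeps every version of every file with the time it was written."""

    def __init__(self) -> None:
        # Per file: parallel lists of write timestamps and contents, in write order.
        self._times: dict[str, list[int]] = {}
        self._data: dict[str, list[bytes]] = {}

    def record(self, name: str, timestamp: int, content: bytes) -> None:
        """Append a new version of `name`; timestamps must not go backward."""
        times = self._times.setdefault(name, [])
        if times and timestamp < times[-1]:
            raise ValueError("journal entries must be appended in time order")
        times.append(timestamp)
        self._data.setdefault(name, []).append(content)

    def restore_as_of(self, name: str, timestamp: int) -> bytes | None:
        """Return `name` as it existed at `timestamp`, or None if it did not exist yet."""
        times = self._times.get(name, [])
        idx = bisect_right(times, timestamp) - 1  # last write at or before `timestamp`
        return self._data[name][idx] if idx >= 0 else None


journal = WriteJournal()
journal.record("report.docx", 100, b"draft")
journal.record("report.docx", 200, b"final")
journal.record("report.docx", 300, b"ENCRYPTED-BY-RANSOMWARE")

# The bad write at t=300 was noticed later; step back to just before it.
print(journal.restore_as_of("report.docx", 299))  # b'final'
```

Every version is retained, so the journal can answer "what did this file look like at time *t*?" for any *t*. That is what lets CDP recover a clean copy even when corruption goes unnoticed for a while.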

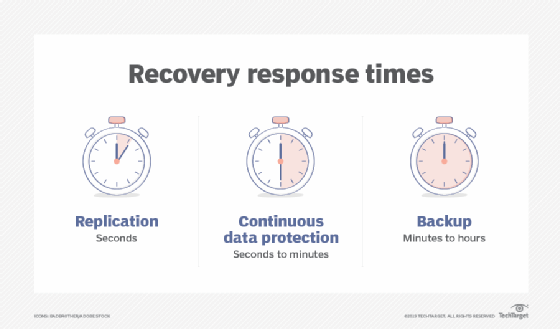

A CDP backup system with disk storage offers near-real-time data recovery. Recovery can happen in a matter of seconds, much faster than restoring from traditional tape-based backups or archives. CDP systems are a common addition to enterprise storage infrastructure. Installing CDP hardware and software is straightforward and doesn't put existing data at risk.

How does continuous data protection work?

CDP was originally introduced to circumvent the problem of shrinking backup windows. Before continuous data protection software was available, most organizations performed regular system backups to disk or tape. However, many organizations had to protect a constantly growing data set within a fixed backup window. There are several techniques for expediting tape backups, but only so much data can be backed up in a given period.

To solve this problem, backup approaches shifted from full, tape-based backups to partial, or only-what-has-changed, disk-based backups. This is the model that CDP software uses. Disk-based backup has the added benefits of overcoming tape capacity limitations and reducing the time required to restore data.

CDP creates an initial copy of the data on a protection server, which usually resides in the organization's own data center. It then uses changed block tracking to back up only the storage blocks that have been modified or newly created since the previous backup. These changed blocks are known as the delta, or change. This approach minimizes the amount of data that must be backed up in each cycle and effectively eliminates the backup window. As a result, backups occur every few minutes rather than once per night.
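The following Python sketch illustrates changed block tracking in simplified form. It assumes a fixed 4 KiB block size and whole-block writes. `TrackedVolume` and `take_delta` are hypothetical names. Real implementations track changes in the hypervisor or storage driver rather than in application code.

```python
from __future__ import annotations

BLOCK_SIZE = 4096  # assumed block size in bytes


class TrackedVolume:
    """Toy volume that remembers which blocks changed since the last backup."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]
        self.changed: set[int] = set()  # indices of blocks written since the last backup

    def write(self, block_index: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("this sketch only supports whole-block writes")
        self.blocks[block_index] = data
        self.changed.add(block_index)

    def take_delta(self) -> dict[int, bytes]:
        """Return only the changed blocks (the delta) and reset tracking for the next cycle."""
        delta = {i: self.blocks[i] for i in sorted(self.changed)}
        self.changed.clear()
        return delta


volume = TrackedVolume(num_blocks=1024)
initial_full = list(volume.blocks)   # initial full copy sent to the protection server
volume.write(7, b"a" * BLOCK_SIZE)
volume.write(7, b"b" * BLOCK_SIZE)   # a block rewritten twice is shipped only once
volume.write(42, b"c" * BLOCK_SIZE)

delta = volume.take_delta()
print(sorted(delta))  # [7, 42]
print(len(delta) * BLOCK_SIZE, "bytes instead of", len(initial_full) * BLOCK_SIZE)
# 8192 bytes instead of 4194304
```

In this cycle, only the two changed blocks (8 KiB) are shipped, rather than the full 4 MiB volume. That saving is what makes short backup intervals practical.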

Although there are exceptions, most modern CDP platforms create incremental forever backups. Once an initial full backup is written to physical disk storage, there's no need to back up the data again. Instead, only modified or newly created storage blocks are backed up.

This approach makes it easy to perform a bulk or granular recovery of data as it existed at a previous point in time. In practice, however, CDP backups are periodically consolidated into a new full backup. This keeps the chain of changes from growing too long and reduces the risk that corruption within the change records will prevent a proper restoration.
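Below is a minimal sketch of an incremental-forever chain and its periodic consolidation. It assumes a toy representation in which a backup is a Python dict mapping block index to block contents. The function names `restore_point` and `consolidate` are hypothetical. Consolidation here produces what is often called a synthetic full backup: it is built from existing backup data rather than by rereading the source.

```python
from __future__ import annotations

# Toy representation: a full backup maps every block index to its contents;
# each incremental holds only the blocks that changed in that cycle.
Backup = dict[int, bytes]


def restore_point(full: Backup, incrementals: list[Backup], upto: int) -> Backup:
    """Rebuild the volume as of incremental number `upto` (0 = the full backup alone)."""
    image = dict(full)
    for delta in incrementals[:upto]:
        image.update(delta)  # newer block versions overwrite older ones
    return image


def consolidate(full: Backup, incrementals: list[Backup], keep: int) -> tuple[Backup, list[Backup]]:
    """Fold all but the newest `keep` incrementals into a new synthetic full backup,
    shortening the chain a restore must replay."""
    cut = max(len(incrementals) - keep, 0)
    return restore_point(full, incrementals, cut), incrementals[cut:]


full = {0: b"A0", 1: b"B0", 2: b"C0"}
chain = [{1: b"B1"}, {2: b"C1"}, {1: b"B2"}, {0: b"A1"}]
new_full, new_chain = consolidate(full, chain, keep=1)
print(new_full)        # {0: b'A0', 1: b'B2', 2: b'C1'}
print(len(new_chain))  # 1

# The latest point in time is identical before and after consolidation.
assert restore_point(full, chain, len(chain)) == restore_point(new_full, new_chain, len(new_chain))
```

In this sketch, consolidation gives up the ability to restore to points older than the new full backup. A real product would decide which restore points to keep according to its retention settings.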

To maintain business continuity, organizations must be able to create offsite backups. CDP servers generally reside in an organization's data center. However, most CDP systems can create secondary tape backups or replicate backups to the cloud or a backup data center. That way, if something were to happen to the organization's primary backup and recovery server, a secondary backup copy exists elsewhere that can be used for disaster recovery purposes.

What type of data do CDPs protect?

CDP systems can support any type of enterprise data, but are commonly used to protect the following:

- System files, such as server operating systems and configurations.

- Application files or the programs that the enterprise uses.

- Application data or the information applications create and use.

- System management data, such as server and platform logs and metrics collection.

- Database and data management systems and files.

Data protection technologies, including CDP and more traditional backup methods, are designed to guard long-lived or business-critical data that might be needed for months or even years. CDP isn't well suited to short-lived data or to use cases where data churns so frequently that older versions have no value. It's also not useful for data that becomes obsolete quickly, such as internet of things data, or that carries little tangible business value, such as machine learning training data sets. Organizations typically reserve CDP for specific high-value business applications and data assets that require quick recovery.

How to implement CDP

CDP can be implemented in several environments, most commonly in virtual machine (VM) and cloud-based environments.

Virtual machines

In virtualized environments, CDP plays a critical role in protecting VMware VMs. It helps organizations minimize data loss for workloads where losing even seconds or minutes of data is unacceptable. CDP for VMware uses the vSphere APIs for I/O Filtering (VAIO) to read and process I/O operations in transit between protected VMs and their underlying datastore, which eliminates the need for VM snapshots. This approach allows for a near-zero recovery point objective (RPO), ensuring almost no data loss.
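The sketch below models, in plain Python, what an I/O filter does conceptually. Each write from a VM continues to the datastore as normal, while a copy goes onto a journal for the backup side, so no VM snapshot is required. This is not the VAIO interface. `ToyIOFilter`, `WriteIO` and `drain` are hypothetical names, and the in-memory queue stands in for the transport a real product would use.

```python
from __future__ import annotations

import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class WriteIO:
    """One write in flight from a protected VM to its datastore."""
    vm: str
    offset: int
    data: bytes
    seq: int  # sequence number that preserves write order


class ToyIOFilter:
    """Hypothetical stand-in for a hypervisor I/O filter: forwards each write to the
    datastore and copies it to a journal queue, so no snapshot is needed."""

    def __init__(self, datastore: dict[tuple[str, int], bytes]) -> None:
        self.datastore = datastore
        self.journal: queue.Queue[WriteIO] = queue.Queue()
        self._seq = 0

    def on_write(self, vm: str, offset: int, data: bytes) -> None:
        self._seq += 1
        self.datastore[(vm, offset)] = data                    # primary write proceeds normally
        self.journal.put(WriteIO(vm, offset, data, self._seq))  # copy goes to the CDP side


def drain(journal: queue.Queue[WriteIO]) -> list[WriteIO]:
    """Simulate the backup side consuming journaled writes in order."""
    items = []
    while not journal.empty():
        items.append(journal.get_nowait())
    return items


store: dict[tuple[str, int], bytes] = {}
flt = ToyIOFilter(store)
flt.on_write("vm-01", 0, b"boot")
flt.on_write("vm-01", 4096, b"log entry")
shipped = drain(flt.journal)
print([(w.seq, w.offset) for w in shipped])  # [(1, 0), (2, 4096)]
```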

To implement CDP for VMs, organizations need to do the following:

- Install the CDP I/O filter on ESXi hosts running version 6.7 or later.

- Configure required backup infrastructure components.

- Create a CDP policy.

- Assign the policy to VMs.
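Here is a hypothetical Python model that illustrates roughly what a CDP policy captures: an RPO target, a retention period and a set of assigned VMs. It also includes a check that flags VMs whose most recent protected write is older than the RPO. The field names and the violation check are assumptions for illustration, not a vendor schema or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CdpPolicy:
    """Hypothetical CDP policy; field names are illustrative, not a vendor schema."""
    name: str
    rpo_seconds: int      # maximum acceptable data loss
    retention_hours: int  # how long point-in-time restore points are kept
    vms: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        if self.rpo_seconds <= 0 or self.retention_hours <= 0:
            raise ValueError("RPO and retention must be positive")

    def assign(self, *vm_names: str) -> None:
        self.vms.update(vm_names)

    def rpo_violations(self, last_protected: dict[str, float], now: float) -> list[str]:
        """Assigned VMs whose newest protected write is older than the RPO.
        A VM with no protected data yet counts as a violation."""
        return sorted(
            vm for vm in self.vms
            if now - last_protected.get(vm, float("-inf")) > self.rpo_seconds
        )


policy = CdpPolicy(name="tier1-sql", rpo_seconds=15, retention_hours=4)
policy.assign("sql-01", "sql-02", "web-01")
last = {"sql-01": 995.0, "sql-02": 970.0}  # time of newest protected write, in seconds
print(policy.rpo_violations(last, now=1000.0))  # ['sql-02', 'web-01']
```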

Cloud-based

CDP has gained importance as more businesses adopt cloud environments. It can be implemented in both hybrid cloud and multi-cloud environments. Hybrid cloud CDP combines on-premises and cloud storage, enabling rapid local recovery as well as off-site disaster recovery. With multi-cloud CDP, organizations can replicate data across multiple cloud providers for redundancy and disaster recovery.

Cloud-based CDP offers several advantages:

- Simplified data protection without the need to manage physical backup hardware or media.

- Improved disaster recovery capabilities.

- Enhanced data monitoring for compliance purposes.

CDP implementation best practices

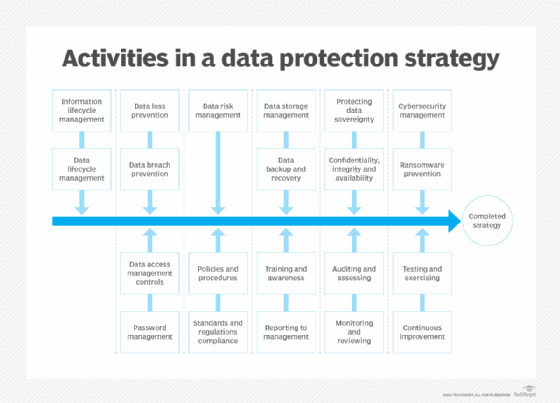

To ensure effective CDP implementation in modern IT environments, organizations should follow these best practices:

- Automate data security processes with security compliance checks, regulatory baseline scanning and infrastructure as code.

- Encrypt all data at rest and in transit, using methods such as full-disk or partition encryption and hardware-based encryption.

- Implement access controls to ensure only authorized personnel have access to critical data.

- Provide regular security training to reduce human error and the risk of phishing attacks.

- Keep security software, such as antivirus and antimalware tools, up to date.

- Require multifactor authentication for access to critical systems.

- Regularly update and patch systems for both on-premises and cloud services to address vulnerabilities.
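An automated compliance check can be as simple as comparing an inventory of backup systems against a baseline. This Python sketch uses a hypothetical `BackupTarget` inventory record and an assumed 30-day patch baseline. In practice, such checks would run against data pulled from configuration management or infrastructure-as-code tooling.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BackupTarget:
    """Hypothetical inventory record for a CDP server or replica target."""
    name: str
    encrypted_at_rest: bool
    tls_in_transit: bool
    mfa_required: bool
    days_since_patch: int


MAX_PATCH_AGE_DAYS = 30  # assumed baseline; substitute your own policy value


def compliance_findings(targets: list[BackupTarget]) -> dict[str, list[str]]:
    """Compare each target with the baseline and list the checks it fails."""
    findings: dict[str, list[str]] = {}
    for t in targets:
        failed = []
        if not t.encrypted_at_rest:
            failed.append("encryption at rest")
        if not t.tls_in_transit:
            failed.append("encryption in transit")
        if not t.mfa_required:
            failed.append("multifactor authentication")
        if t.days_since_patch > MAX_PATCH_AGE_DAYS:
            failed.append("patch level")
        if failed:
            findings[t.name] = failed
    return findings


inventory = [
    BackupTarget("cdp-primary", True, True, True, 12),
    BackupTarget("cloud-replica", True, False, True, 45),
]
print(compliance_findings(inventory))
# {'cloud-replica': ['encryption in transit', 'patch level']}
```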

What are the benefits and drawbacks of continuous data backup?

There are both advantages and disadvantages to using CDP. In most cases, the advantages far outweigh the disadvantages.

Advantages

- CDP backups eliminate the need for a backup window.

- CDP backup servers are generally scalable and don't have the capacity limits of tape-based backup.

- Unlike tape, which must be read sequentially, disk supports random access, so it's usually possible to restore data faster than with a tape system.

- CDP systems enable point-in-time recoveries without needing to retrieve a tape from offsite storage.

- Many CDP platforms can perform instant VM recovery by running the VM directly from the backup server while a traditional restoration occurs in the background.

- CDP supports file and data version control, letting a business roll back a file or data to a previous version or state as needed.

Disadvantages

- CDP storage and software or subsystems can be costly for smaller organizations.

- If not properly architected, a CDP backup server can become a single point of failure. For example, corruption in the delta chain can prevent proper restorations.

- CDP systems benefit from high-availability deployment techniques, but these add to the cost and complexity.

- CDP drives more storage traffic, which can stress networks and storage systems.

Why is CDP important to businesses?

CDP is important to businesses for the following reasons:

- Minimizes the loss of data, including data that's critical to an organization's competitive advantage. A breach involving such data costs businesses an average of $4.8 million per incident.

- Reduces downtime and maintains business continuity by providing quick recovery and up-to-date backups.

- Supports compliance with regulations such as the General Data Protection Regulation and Health Insurance Portability and Accountability Act.

- Enhances security and limits the impact of data corruption through frequent snapshots and backups.

How does near-CDP compare to true CDP?

The primary difference between CDP and near-continuous backup is the RPO. True CDP systems guarantee that all newly created data is backed up. These systems, which tend to be designed for protecting structured data, are more demanding, costly and complex than near-continuous backup platforms. They're used in financial services and other industries that must guarantee the protection of all data in real time.

When most people use the term continuous data protection, they're usually referring to near-continuous backup platforms. Rather than performing instantaneous backups as a true CDP platform does, near-continuous backup platforms perform block-level backups on a scheduled basis. The frequency of these scheduled backups varies based on the platform, but most have an RPO in the range of 30 seconds to 15 minutes.
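The following Python sketch shows why near-CDP's worst-case data loss is roughly its backup interval. It makes two simplifying assumptions: backups run at fixed multiples of the interval, and they complete instantly. True CDP is modeled as protecting every write as it happens. `lost_writes` is a hypothetical helper.

```python
from __future__ import annotations


def lost_writes(write_times: list[float], failure_time: float, interval: float | None) -> list[float]:
    """Writes that would be lost if the primary failed at `failure_time`.

    interval=None models true CDP (every write protected as it happens).
    Otherwise, backups are assumed to run at 0, interval, 2*interval, ...
    and to capture every write made at or before their start time.
    """
    if interval is None:
        return []
    last_backup = (failure_time // interval) * interval
    return [t for t in write_times if last_backup < t <= failure_time]


writes = [10.0, 95.0, 130.0, 170.0, 185.0, 199.0]  # write timestamps in seconds
print(lost_writes(writes, 200.0, None))   # []
print(lost_writes(writes, 200.0, 60.0))   # [185.0, 199.0]
print(lost_writes(writes, 200.0, 15.0))   # [199.0]
```

With a 60-second interval, the two writes after the 180-second backup are lost. A 15-second interval loses one, and true CDP loses none. The worst case approaches the full interval, which is why near-CDP RPOs track the backup schedule.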

Near-CDP places fewer demands on the network in terms of bandwidth and latency. Organizations can use near-CDP to protect more workloads, but those workloads must tolerate some potential data loss. As with many IT systems, it's important for business and IT leaders to match the right technology to their business needs.

CDP vs. disk mirroring

Disk mirroring, also known as RAID 1, provides full data replication to two or more disks in real time. If one drive fails, the organization can use the redundant mirror copy immediately with no data loss. However, a mirror, like any full backup, requires a lot of storage capacity. Because it replicates every write as it happens, it also replicates accidental deletions, corruption and malware damage. Unlike CDP, it keeps no history of earlier versions to roll back to. Before the advent of cloud storage, small and medium-sized businesses running only one server and a handful of laptops were less likely to implement CDP because of its cost and complexity.
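A toy Python model shows what happens to a mirror and to a CDP journal when a bad write arrives. `Raid1Mirror` and `ToyCdpJournal` are hypothetical classes. The "corrupted" write stands in for anything from a software bug to ransomware.

```python
from __future__ import annotations


class Raid1Mirror:
    """Toy RAID 1: every write goes to both disks at once."""

    def __init__(self) -> None:
        self.disks: list[dict[int, bytes]] = [{}, {}]

    def write(self, block: int, data: bytes) -> None:
        for disk in self.disks:
            disk[block] = data


class ToyCdpJournal:
    """Toy CDP: every write is appended to a history, so older versions survive."""

    def __init__(self) -> None:
        self.history: list[tuple[int, int, bytes]] = []  # (sequence, block, data)

    def write(self, block: int, data: bytes) -> None:
        self.history.append((len(self.history), block, data))

    def read_as_of(self, block: int, seq: int) -> bytes | None:
        """Latest version of `block` written at or before sequence number `seq`."""
        versions = [d for s, b, d in self.history if b == block and s <= seq]
        return versions[-1] if versions else None


mirror, cdp = Raid1Mirror(), ToyCdpJournal()
for store in (mirror, cdp):
    store.write(0, b"good data")  # sequence 0 in the journal
    store.write(0, b"corrupted")  # sequence 1: a bad write

print([disk[0] for disk in mirror.disks])  # [b'corrupted', b'corrupted']
print(cdp.read_as_of(0, seq=0))            # b'good data'
```

Both mirror disks end up holding the corrupted block. The journal can still return the version from before the bad write.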

CDP vs. traditional backup

CDP effectively solves the biggest challenges associated with traditional backups. Most notably, it eliminates the nightly backup window. Whereas traditional backup products often back up data at the file level, CDP is a block-level technology, so it backs up new or modified storage blocks almost immediately.

CDP also helps address traditional backup challenges by reducing the RPO. A traditional nightly backup occurs once every 24 hours, and any data created or modified since the most recent backup is subject to loss. If an organization's nightly backup completes at midnight and a data loss event occurs at noon, any data created or modified between midnight and noon is lost. In contrast, CDP platforms back up data almost immediately. An organization should therefore never lose more than a few minutes of data, or none at all if the business implements true CDP.
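The arithmetic in the nightly backup example can be checked with Python's standard `datetime` module. The 11:55 a.m. last-protected time assumes a five-minute near-CDP interval. That interval and the calendar date are illustrative assumptions, not figures from any particular product.

```python
from datetime import datetime, timedelta


def max_data_loss(last_protected: datetime, failure: datetime) -> timedelta:
    """Worst-case data loss: everything written after the last protected point."""
    return failure - last_protected


failure = datetime(2024, 1, 1, 12, 0)                                # data loss event at noon
nightly = max_data_loss(datetime(2024, 1, 1, 0, 0), failure)         # backup finished at midnight
near_cdp = max_data_loss(datetime(2024, 1, 1, 11, 55), failure)      # assumed 5-minute interval

print(nightly)             # 12:00:00
print(near_cdp)            # 0:05:00
print(nightly / near_cdp)  # 144.0
```

In this example, the nightly schedule exposes 144 times as much data to loss as a five-minute near-CDP interval.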
